Introduction to Kubernetes

Introduction to Kubernetes is a presentation giving attendees a thorough understanding of the fundamentals of Kubernetes.

whoami

James Strong

Technical Principal @ Contino

Certified Kubernetes Admin

Contino

Agenda

Introduction

Containers

Kubernetes

Kubernetes Objects

Extras

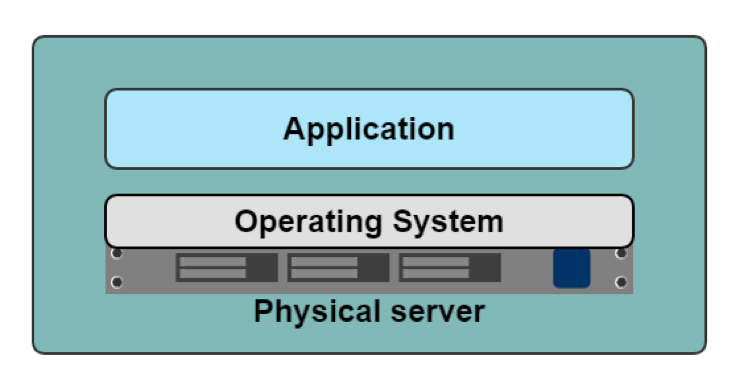

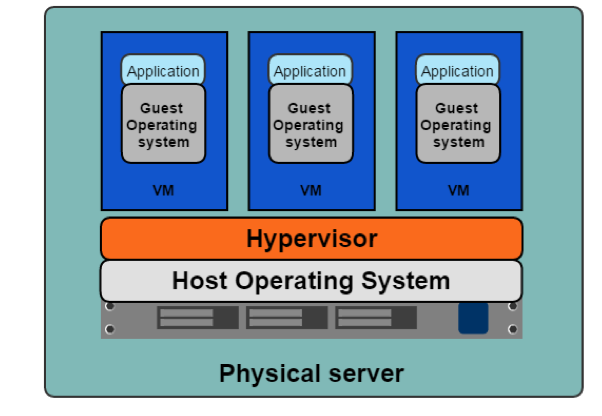

History

In the beginning

The Hypervisor

2007

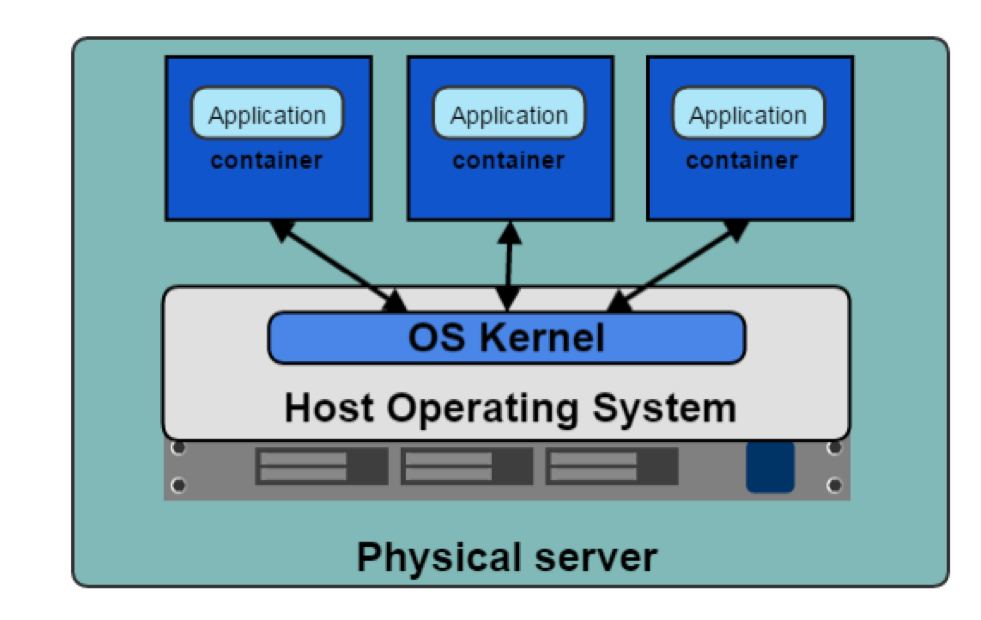

Container Primitives

- Control Groups

- Namespaces

Control Groups

Control groups, abbreviated cgroups, are a Linux kernel feature that limits, accounts for, and isolates the resource usage of a collection of processes:

- CPU

- memory

- disk I/O

- network

Namespaces

A feature of the Linux kernel that isolates and virtualizes the system resources of a collection of processes. Examples of resources that can be virtualized include:

- process IDs

- hostnames

- user IDs

- network access

- interprocess communication

- filesystems

Containers

Rise of the Containers (runtimes)

- lxc

- Docker ( containerd )

- rkt

- runc

- lmctfy

- cri-o

The orchestrator

2010

Mesosphere

2013

Docker Released

0.1 release of Docker Compose

2014

Kubernetes released

Rancher release 1.0 supports Docker Swarm, Mesos, and Kubernetes on the Docker container runtime

2016

Mesosphere supports Docker, rkt, and appc for container runtimes

2017

Mesosphere adds support for Kubernetes

2018

Docker EE 2.0 has support for the Kubernetes orchestrator

Containers Benefits

- Separation of concerns

- Developers focus on building their apps

- System admins focus on deployment

- Fast development cycle

- Application portability

- Build in one environment, ship to another

- Scalability

- Easily spin up new containers if needed

Dockerfile

- Instructions specify what to do when building the image

- FROM instruction specifies what the base image should be

- RUN instruction specifies a command to execute

- Comments start with “#”

- Remember, each instruction in a Dockerfile creates a new layer if it changes the state of the image

- You need to find the right balance between having lots of layers created for the image and readability of the Dockerfile

Dockerfile

- Don’t install unnecessary packages

- One ENTRYPOINT per Dockerfile

- Combine similar commands into one by using “&&” and “\”

- Use the caching system to your advantage

- The order of statements is important

- Add files that are least likely to change first and the ones most likely to change last

Kubernetes

Kubernetes History

Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.

Kubernetes History

- Kubernetes is heavily influenced by Google’s Borg system

- Announced by Google in 2014; Google partnered with the Linux Foundation to form the CNCF alongside the v1.0 release in 2015

- Often called K8s, which is a numeronym

- K[ubernete]s → K[8]s → K8s

- Kubernetes - Greek for helmsman or pilot

- Kubernetes v1.0 was released on July 21, 2015

Kubernetes History

“Kubernetes was built to radically change the way that applications are built and deployed in the cloud. Fundamentally, it was designed to give developers more velocity, efficiency, and agility”

Kelsey Hightower, Brendan Burns & Joe Beda, Kubernetes: Up and Running

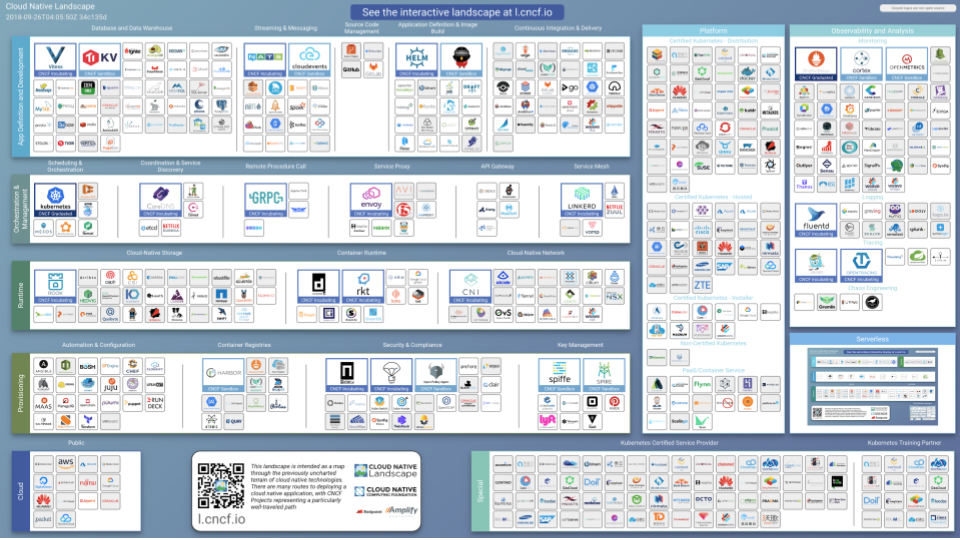

CNCF Landscape

CNCF Involvement

Slack

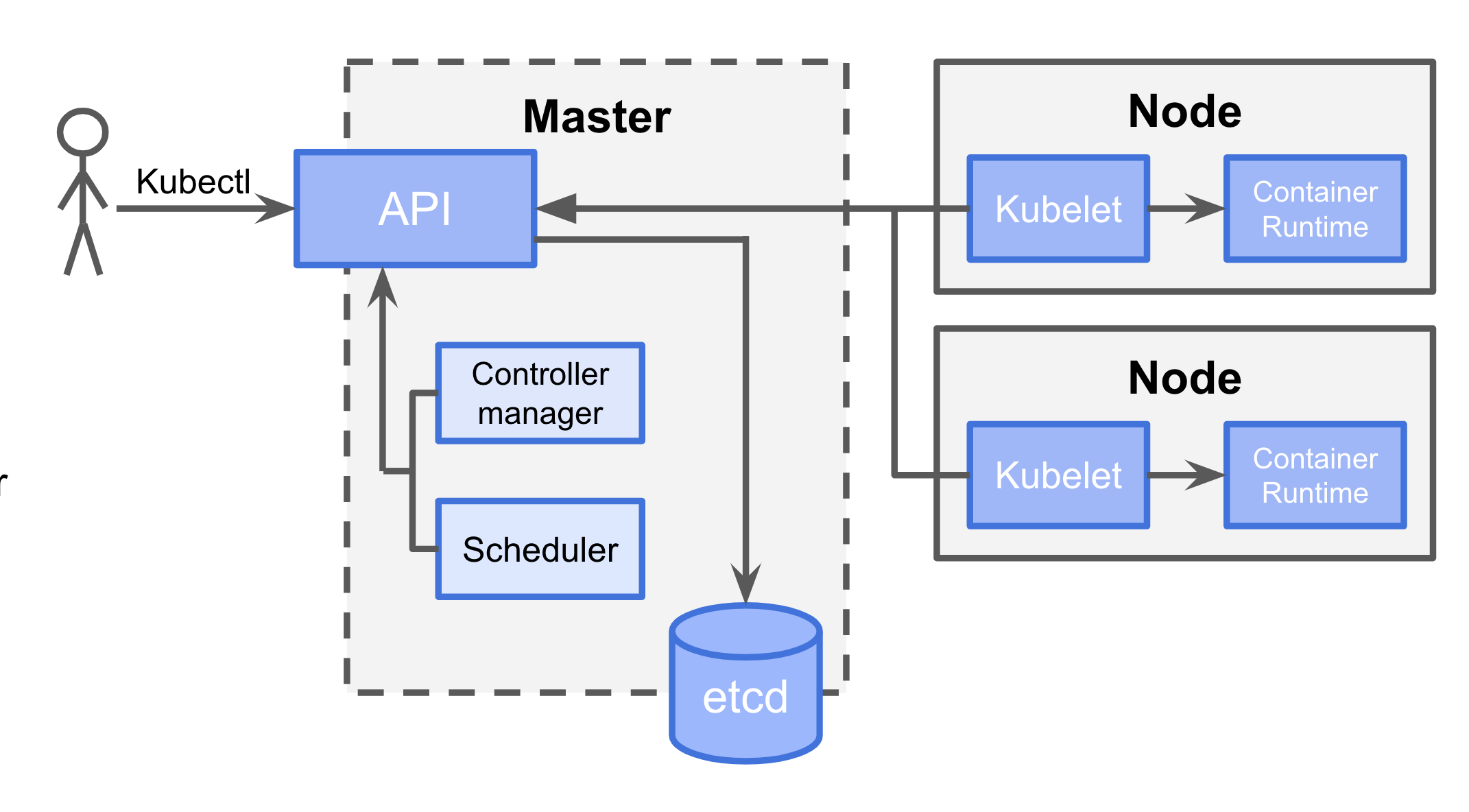

High level Architecture

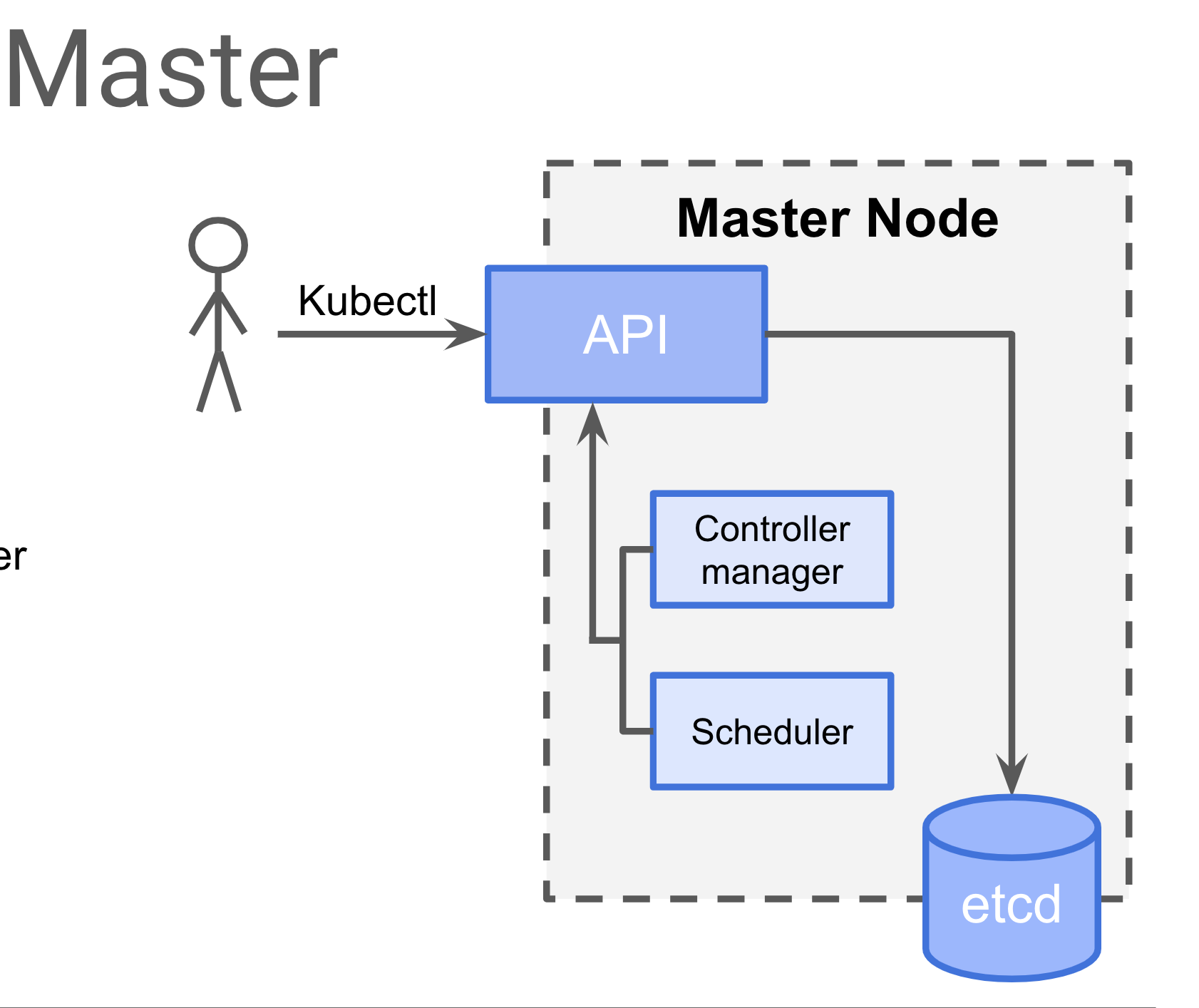

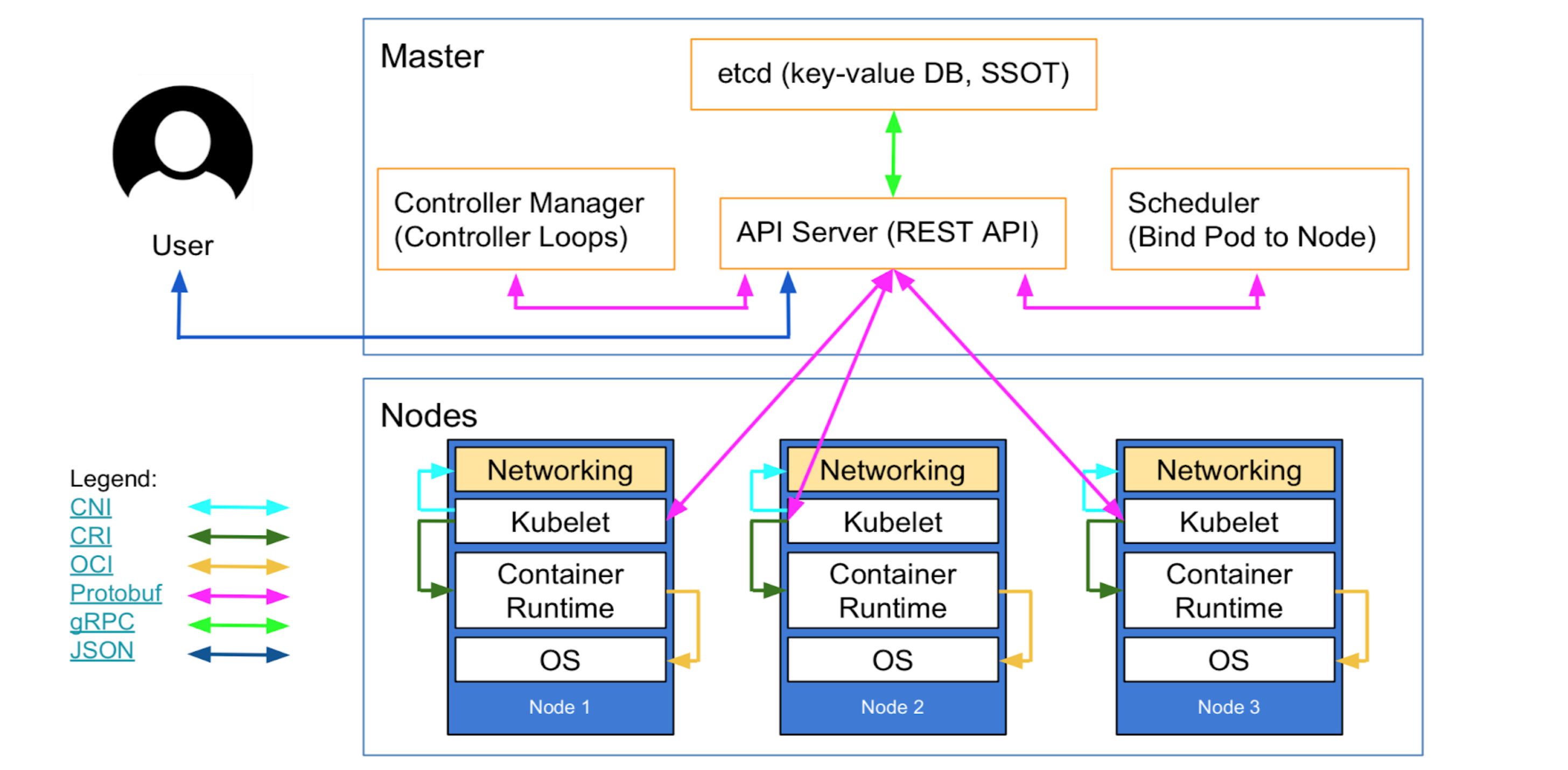

Master Node

API server data store: etcd (cluster state)

Controller Manager:

- Node Controller

- Deployment Controller

- ReplicaSet Controller

- Replication Controller

- Endpoints Controller

- Service Account & Token Controller

Scheduler: binds Pods to Nodes

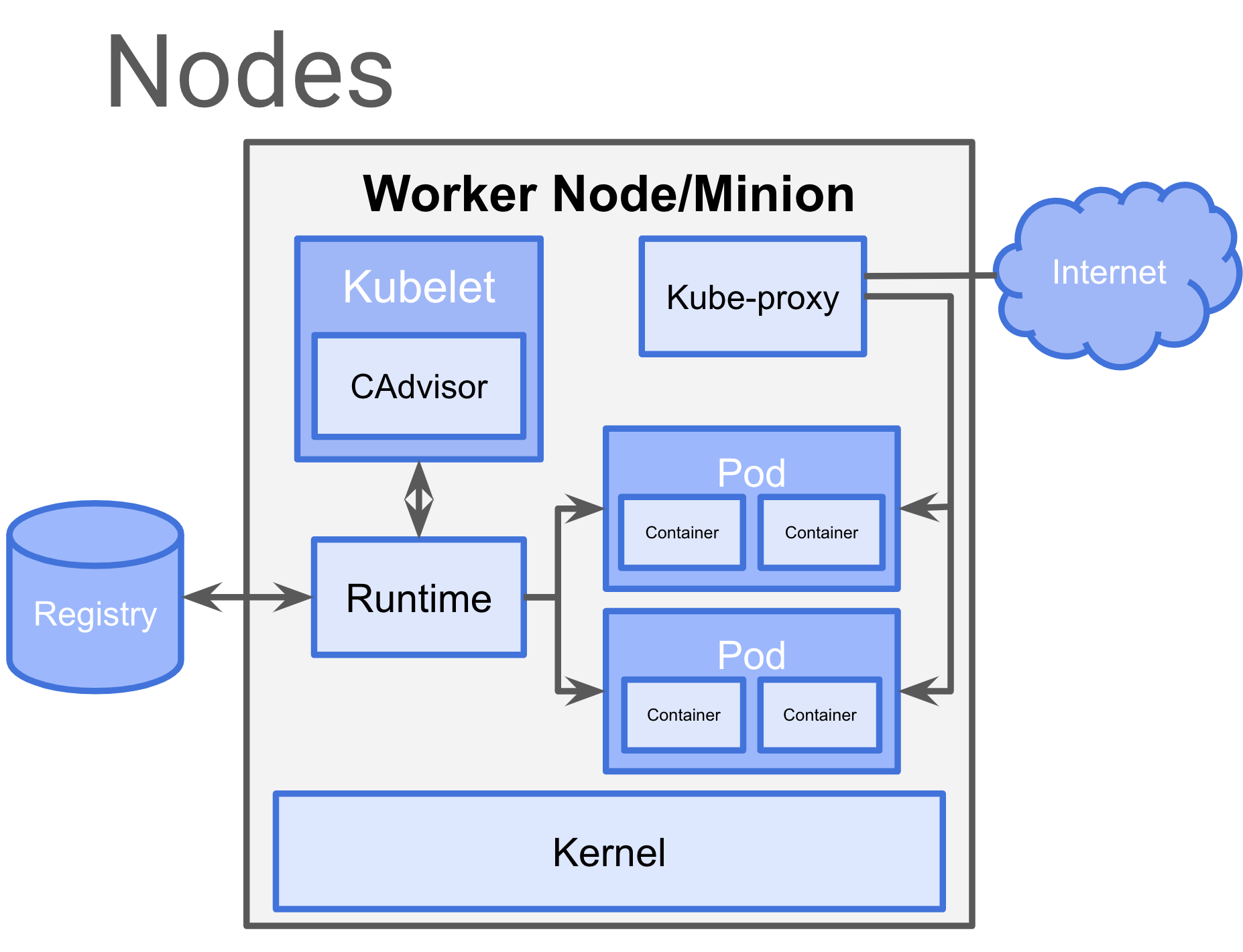

Worker Node

Kubelet:

- cAdvisor (metrics, logs…)

Container Runtime:

- docker

Pod:

- Container (one or more)

Kube-proxy:

- Used to reach services and allow communication between Nodes.

Data Flow

CNI (Container Network Interface): the network plugin interface the kubelet uses to talk to the network provider and obtain IP addresses for Pods.

gRPC: the protocol the API server uses to communicate with etcd. The Controller Manager and Scheduler, in turn, talk to the API server.

Kubelet: every K8s node runs a kubelet, which ensures that the pods assigned to that node are running and configured in the desired state.

CRI (Container Runtime Interface): a gRPC API compiled into the kubelet that allows the kubelet to talk to container runtimes.

A container runtime provider has to implement the CRI API so that the kubelet can talk to it; the containers themselves are run following the OCI standard (runc).

Initially, Kubernetes was built on top of Docker as the container runtime. Soon after, CoreOS announced the rkt container runtime and wanted Kubernetes to support it as well. So Kubernetes ended up supporting Docker and rkt, although this model wasn’t very scalable in terms of adding new features or support for new container runtimes.

CRI consists of protocol buffers, a gRPC API, and libraries.

Data Flow

Kubernetes API Objects

Namespaces

Namespaces are virtual clusters inside your Kubernetes cluster that provide logical isolation (kinda) from each other.

Scope of names

Organization of Kubernetes resources
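
A namespace is itself an API object. As a minimal sketch, the manifest below creates one and places a Pod in it. The names `team-a` and `web` are hypothetical, chosen only for illustration.

```yaml
# Hypothetical namespace used to group one team's resources.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
---
# A Pod scoped to that namespace; its name only needs to be unique within team-a.
apiVersion: v1
kind: Pod
metadata:
  name: web
  namespace: team-a
spec:
  containers:
  - name: web
    image: nginx
```

Another Pod named `web` could exist in a different namespace without conflict, which is what "scope of names" refers to.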

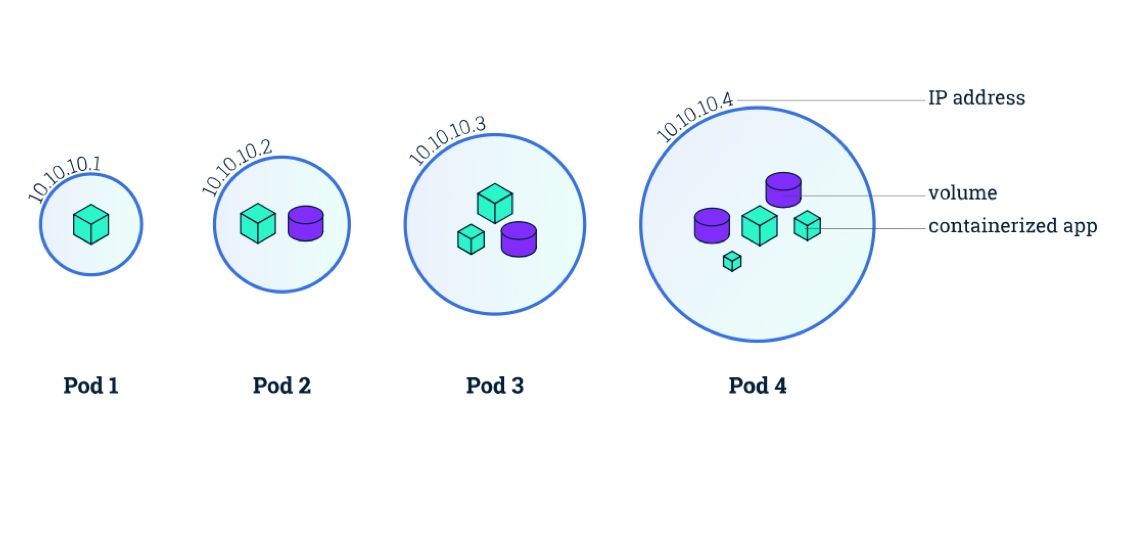

Pods

Pods are a collection of containers that share a namespace and are colocated and scheduled together on Kubernetes nodes.

A pod is a group of one or more containers, with shared storage/network, and a specification for how to run the containers
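
To make the "shared storage" part concrete, here is a rough sketch of a Pod whose two containers exchange data through a shared `emptyDir` volume. The Pod, container, and volume names are assumptions made up for this example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-pod            # hypothetical name
spec:
  volumes:
  - name: shared-data         # scratch volume that lives as long as the Pod
    emptyDir: {}
  containers:
  - name: writer              # writes a timestamp every 5 seconds
    image: busybox
    command: ["/bin/sh", "-c", "while true; do date > /data/now.txt; sleep 5; done"]
    volumeMounts:
    - name: shared-data
      mountPath: /data
  - name: reader              # reads the file written by the other container
    image: busybox
    command: ["/bin/sh", "-c", "while true; do cat /data/now.txt 2>/dev/null; sleep 5; done"]
    volumeMounts:
    - name: shared-data
      mountPath: /data
```

Both containers are scheduled onto the same node and also share the Pod's network namespace, so they could reach each other over `localhost` as well.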

Pods

Labels

Labels are key/value pairs attached to objects, such as pods, that help identify those objects.

Selectors

Label selectors let a client or user identify a set of objects.

    spec:
      selector:
        matchLabels:
          app: mysql
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: mysql

Demo

Create labels & use selector to identify set of objects

Resource Quotas

Requests - how much a pod needs in order to run

Limits - the most a pod is allowed to use

Because Kubernetes is a multi-tenant environment, some applications may hog resources and starve others. Resource Quotas discourage this behavior by capping the total resources a namespace can consume.
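
Requests and limits are declared per container in the Pod spec. The following is a sketch only: the Pod name and the specific CPU and memory values are illustrative assumptions, not recommendations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: quota-demo            # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
    resources:
      requests:               # what the scheduler reserves for this container
        cpu: 250m
        memory: 64Mi
      limits:                 # the ceiling the container may not exceed
        cpu: 500m
        memory: 128Mi
```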

Resources

- CPU

- Memory

- Storage

- requests.storage

- persistentvolumeclaims

- storage-class-name.storageclass.storage.k8s.io/requests.storage

- storage-class-name.storageclass.storage.k8s.io/persistentvolumeclaims

Resources

- Object Count

- configmaps

- persistentvolumeclaims

- pods

- replicationcontrollers

- resourcequotas

- services

- services.loadbalancers

- services.nodeports

- secrets

- Priority - low, medium, high
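
The resource names listed above are the keys of a ResourceQuota's `spec.hard` map. A minimal sketch, assuming a hypothetical namespace `team-a` and made-up limits:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-quota            # hypothetical name
  namespace: team-a           # hypothetical namespace the quota applies to
spec:
  hard:
    # Compute totals across all Pods in the namespace
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    # Storage totals across all PersistentVolumeClaims
    requests.storage: 100Gi
    persistentvolumeclaims: "10"
    # Object counts
    pods: "20"
    services.loadbalancers: "2"
```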

Controllers

In Kubernetes, a controller is a control loop that watches the shared state of the cluster through the apiserver and makes changes attempting to move the current state towards the desired state. There are several controllers in the Kubernetes architecture, each supporting a different function in the system.

Namespace controller - Creates and updates the Namespaces in kubernetes

Serviceaccounts controller - Manages the service accounts in the system, which are for processes to interact with Kubernetes.

Node Controller - Responsible for noticing and responding when nodes go down.

Service Account & Token Controllers - Create default accounts and API access tokens for new namespaces.

Deployment Controller - A Deployment controller provides declarative updates for Pods and ReplicaSets.

Replication Controller - Responsible for maintaining the correct number of pods for every replication controller object in the system.

Endpoints Controller - Populates the Endpoints object (that is, joins Services & Pods). When Services are created, the Endpoints controller manages the connection between the Services and the Pods that back them.

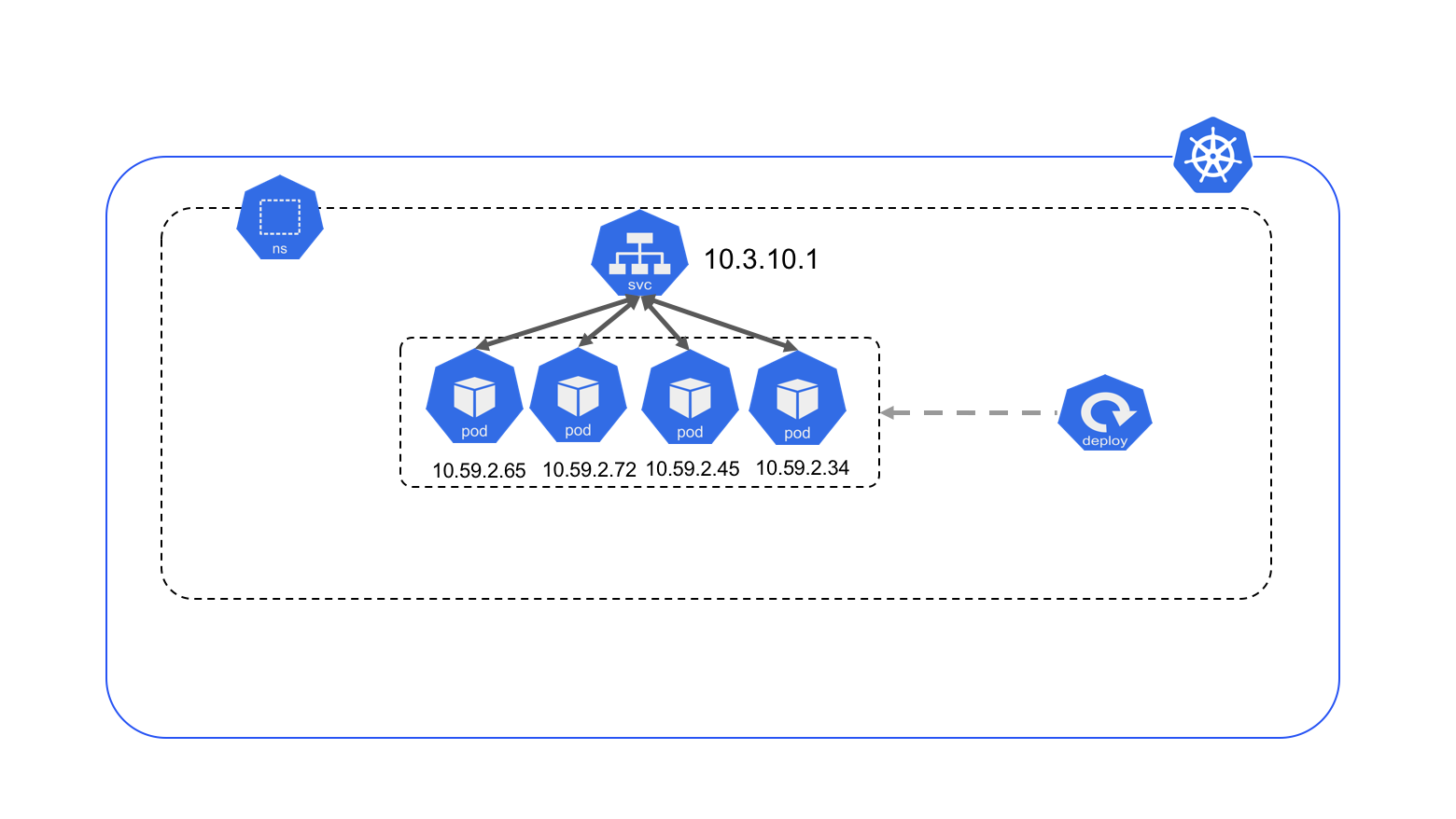

Deployments

- Scaling

- Rolling

ReplicaSet

- Desired state

Strategies

- Recreate

- RollingUpdate (default)

- Blue/Green

- Canary

- A/B Testing
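
Of the strategies listed, only Recreate and RollingUpdate are values of a Deployment's `strategy.type`; Blue/Green, Canary, and A/B testing are usually built from several Deployments and Services. As a sketch of a rolling update configuration, with a hypothetical `web` app and illustrative surge settings:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                   # hypothetical name
spec:
  replicas: 3                 # desired state maintained through a ReplicaSet
  selector:
    matchLabels:
      app: web
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1             # at most one extra Pod during the rollout
      maxUnavailable: 0       # never drop below the desired replica count
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: web
        image: nginx:1.25
```

Changing the image tag and re-applying the manifest would replace Pods one at a time under these settings.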

Storage

Storage, like compute, is another resource that must be managed. Kubernetes offers three storage abstractions:

- Volumes

- Persistent Volumes

- Persistent Volume Claims

The ephemeral nature of pods and containers leads to the need for data to have a lifecycle decoupled from containers and pods.

Storage Classes

Storage classes allow cluster administrators to provide varying levels of support and types of storage to applications in a cluster.

Example: a storage class that provisions an AWS EBS volume when referenced by a PVC

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: standard
    provisioner: kubernetes.io/aws-ebs
    parameters:
      type: gp2
    reclaimPolicy: Retain
    mountOptions:
      - debug
    volumeBindingMode: Immediate

Volume Types

Several volume types are supported

- awsElasticBlockStore

- azureDisk

- gcePersistentDisk

- hostPath

- secret

- configMap

Volume Types Example

awsElasticBlockStore example yaml:

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-ebs
    spec:
      containers:
      - image: k8s.gcr.io/test-webserver
        name: test-container
        volumeMounts:
        - mountPath: /test-ebs
          name: test-volume
      volumes:
      - name: test-volume
        # This AWS EBS volume must already exist.
        awsElasticBlockStore:
          volumeID: <volume-id>
          fsType: ext4

Persistent Volumes

Persistent Volumes (PV’s) are pieces of storage provisioned in a cluster that can be used and referenced in the cluster like any other resource.

Provisioning - Static or Dynamic

Types of PV’s

- GCEPersistentDisk

- AWSElasticBlockStore

- AzureFile

- CephFS

Example

    kind: PersistentVolume
    apiVersion: v1
    metadata:
      name: task-pv-volume
      labels:
        type: local
    spec:
      storageClassName: manual
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteOnce
      hostPath:
        path: "/mnt/data"

Persistent Volume Claims

Persistent Volume Claims (PVC’s) - Allow pods to request and attach Persistent Volumes available in the cluster.

When used with dynamic provisioning and Storage Classes, PVC’s can automatically make storage available on demand.

Types of PVC’s

- GCEPersistentDisk

- AWSElasticBlockStore

- AzureFile

- CephFS

Example

    kind: Pod
    apiVersion: v1
    metadata:
      name: mypod
    spec:
      containers:
        - name: myfrontend
          image: nginx
          volumeMounts:
          - mountPath: "/var/www/html"
            name: mypd
      volumes:
        - name: mypd
          persistentVolumeClaim:
            claimName: myclaim
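
The Pod above refers to a claim named `myclaim` that is not shown. A sketch of what such a claim might look like follows; the storage class `manual`, the access mode, and the 3Gi size are assumptions picked so that it could bind to the `task-pv-volume` example from the Persistent Volumes slide.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim
spec:
  storageClassName: manual    # assumed: matches the PersistentVolume example
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi            # assumed size; must not exceed the volume's capacity
```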

Storage Demo

Create a persistent volume and claim

kubectl apply -f mysql-pv.yaml

Create a pod that will use it.

kubectl apply -f mysql-pod.yaml

Clean up

kubectl delete -f mysql-pod.yaml

kubectl delete -f mysql-pv.yaml

Secrets

A Kubernetes object for injecting sensitive data into containers

Sensitive data should never be built into the container image. To keep it out of the image, Kubernetes offers Secrets.

Secrets

echo -n 'admin' | base64

YWRtaW4=

echo -n '1f2d1e2e67df' | base64

MWYyZDFlMmU2N2Rm

Write a Secret that looks like this:

    apiVersion: v1
    kind: Secret
    metadata:
      name: mysecret
    type: Opaque
    data:
      username: YWRtaW4=
      password: MWYyZDFlMmU2N2Rm
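
As an alternative sketch, the Secret API also accepts a `stringData` field, which takes plain-text values and lets the API server do the base64 encoding. The values below are the same sample credentials decoded.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret
type: Opaque
stringData:                   # plain text here; stored base64-encoded under data
  username: admin
  password: "1f2d1e2e67df"
```

Either way, base64 is an encoding and not encryption, so the manifest itself should be treated as sensitive.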

Secrets ENV

    apiVersion: v1
    kind: Pod
    metadata:
      name: secret-env-pod
    spec:
      containers:
      - name: mycontainer
        image: redis
        env:
          - name: SECRET_USERNAME
            valueFrom:
              secretKeyRef:
                name: mysecret
                key: username
          - name: SECRET_PASSWORD
            valueFrom:
              secretKeyRef:
                name: mysecret
                key: password
      restartPolicy: Never

Secrets File

    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod
    spec:
      containers:
      - name: mypod
        image: redis
        volumeMounts:
        - name: foo
          mountPath: "/etc/foo"
          readOnly: true
      volumes:
      - name: foo
        secret:
          secretName: mysecret

Configmaps

To keep a Docker image immutable, configuration must live outside the container image. K8s ConfigMaps enable this.

Secrets, env variables, and other environment specific items should not be baked into a container image.

Configmaps

Yaml

    apiVersion: v1
    data:
      game.properties: |
        enemies=aliens
        lives=3
        enemies.cheat=true
        enemies.cheat.level=noGoodRotten
        secret.code.passphrase=UUDDLRLRBABAS
        secret.code.allowed=true
        secret.code.lives=30
      ui.properties: |
        color.good=purple
        color.bad=yellow
        allow.textmode=true
        how.nice.to.look=fairlyNice
    kind: ConfigMap
    metadata:
      name: game-config
      namespace: default

Configmaps

Environment Vars

    apiVersion: v1
    kind: Pod
    metadata:
      name: dapi-test-pod
    spec:
      containers:
        - name: test-container
          image: k8s.gcr.io/busybox
          command: [ "/bin/sh", "-c", "echo $(SPECIAL_LEVEL_KEY) $(SPECIAL_TYPE_KEY)" ]
          env:
            - name: SPECIAL_LEVEL_KEY
              valueFrom:
                configMapKeyRef:
                  name: special-config
                  key: SPECIAL_LEVEL
            - name: SPECIAL_TYPE_KEY
              valueFrom:
                configMapKeyRef:
                  name: special-config
                  key: SPECIAL_TYPE
      restartPolicy: Never
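
The Pod above reads two keys from a ConfigMap named `special-config`, which is not shown in these slides. A sketch of a matching ConfigMap could look like this; the key names come from the Pod spec, while the values `very` and `charm` are placeholders assumed for illustration.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: default
data:
  SPECIAL_LEVEL: very         # placeholder value
  SPECIAL_TYPE: charm         # placeholder value
```

With this ConfigMap in place, the container's `echo` command would print the two values.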

Configmaps

Files from Volume mounts

    apiVersion: v1
    kind: Pod
    metadata:
      name: dapi-test-pod
    spec:
      containers:
        - name: test-container
          image: k8s.gcr.io/busybox
          command: [ "/bin/sh", "-c", "ls /etc/config/" ]
          volumeMounts:
          - name: config-volume
            mountPath: /etc/config
      volumes:
        - name: config-volume
          configMap:
            name: special-config
      restartPolicy: Never

Ingress

Ingress is a K8s object that allows external access to resources inside the cluster

By default, Services, Pods and other objects are only accessible inside the cluster

Ingress Controllers

- In order for the ingress resource to work, the cluster must have an ingress controller running.

- This is unlike other types of controllers, which run as part of the kube-controller-manager binary, and are typically started automatically with a cluster.

- Choose the ingress controller implementation that best fits your cluster.

Ingress Controller

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: test-ingress
    spec:
      backend:
        serviceName: testsvc
        servicePort: 80

Ingress controllers

Kubernetes supported Ingress Controllers:

Others that can be deployed:

Full list is here

Ingress Rules

Each http rule contains the following information:

- Host

- list of paths

- Backend service

Ingress Rule

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: simple-fanout-example
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
      - host: foo.bar.com
        http:
          paths:
          - path: /foo
            backend:
              serviceName: service1
              servicePort: 4200
          - path: /bar
            backend:
              serviceName: service2
              servicePort: 8080
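
The Ingress manifests above use `extensions/v1beta1`, which newer clusters no longer serve: Ingress became `networking.k8s.io/v1` in Kubernetes 1.19, and the beta API was removed in 1.22. As a sketch, the same fanout rule in the v1 schema might look like the following; `pathType: Prefix` is an assumption about the intended matching behavior.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: simple-fanout-example
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: foo.bar.com
    http:
      paths:
      - path: /foo
        pathType: Prefix      # required in v1; Prefix assumed here
        backend:
          service:
            name: service1
            port:
              number: 4200
      - path: /bar
        pathType: Prefix
        backend:
          service:
            name: service2
            port:
              number: 8080
```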

Services

Service

Service: a named abstraction of a software service, consisting of a port that the proxy listens on and the selector that determines which pods will answer requests.

Pods come and go, and with that their IP addresses change rapidly. Services decouple the IP address from the application and serve as the stable IP address inside the cluster for an application running in multiple pods.

More info here
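
As a minimal sketch of the port-plus-selector idea, the Service below forwards traffic to any Pod labeled `app: mysql`, the label used in the selector example earlier. The Service name and port 3306 are assumptions, and this is not necessarily the content of the `mysql-service.yaml` used in the demo.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql                 # assumed name; also becomes the in-cluster DNS name
spec:
  selector:
    app: mysql                # Pods carrying this label back the Service
  ports:
  - port: 3306                # port the Service listens on
    targetPort: 3306          # port on the selected Pods
```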

Service

Application Deployment

Create a secret for the password between WordPress and MySQL

kubectl create secret generic mysql-pass --from-literal=password=YOUR_PASSWORD

Verify it is there

kubectl get secrets

Application Deployment

Deploy the service for mysql

kubectl apply -f mysql-service.yaml

Verify the service has endpoints.

kubectl get services -o wide

Application Deployment

Pod Deployment with health checks, PersistentVolume and claim

Since we have created the mysql pod several times, here is a yaml file that creates it all.

Deploy mysql

kubectl apply -f mysql-all.yaml

Verify mysql deployed properly

kubectl get deploy

Application Deployment

Deploy the application that will use mysqld

kubectl apply -f app.yaml

Verify Service

kubectl get services wordpress

Clean up

kubectl delete -f mysql-all.yaml

kubectl delete -f mysql-service.yaml

kubectl delete -f app.yaml

Health Checks

Health checks inform the kubelet that pods are ready to accept traffic

The distributed nature of Kubernetes allows pods to come and go for a number of reasons, and if many are running an application, the kubelet needs to know what a “healthy” pod looks like.

Readiness and Liveness

Liveness

Liveness checks inform the kubelet that the container in the pod is still running properly. If this check fails, the kubelet restarts the container.

Liveness

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        test: liveness
      name: liveness-exec
    spec:
      containers:
      - name: liveness
        image: k8s.gcr.io/busybox
        args:
        - /bin/sh
        - -c
        - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/healthy
          initialDelaySeconds: 5
          periodSeconds: 5

Readiness and Liveness

Readiness

Readiness checks let the kubelet know that the pod is ready to receive traffic. For example, if this check fails, the Service or load balancer does not send traffic to that pod.

Readiness

    readinessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
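
Probes do not have to run a command; HTTP and TCP checks are also available. Here is a sketch of a Pod that combines both kinds of check. The Pod name, the probed path `/`, and the timing values are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probes-demo           # hypothetical name
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
    readinessProbe:           # gate traffic until the web server answers
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 5
    livenessProbe:            # restart the container if the port stops accepting connections
      tcpSocket:
        port: 80
      initialDelaySeconds: 15
      periodSeconds: 10
```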

Daemonsets

Specialized deployments that will deploy pods on every node in the cluster

- Running a cluster storage daemon, such as glusterd or ceph, on each node.

- Running a logs collection daemon on every node, such as fluentd or logstash.

- Running a node monitoring daemon on every node,

- Prometheus Node Exporter

- collectd

- Dynatrace OneAgent

- AppDynamics Agent

- Datadog agent
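
A DaemonSet manifest looks much like a Deployment without a replica count, since the number of Pods follows the number of nodes. The following is a simplified sketch for the Prometheus Node Exporter case; a production setup would typically also need host mounts and host networking, which are left out here.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter         # hypothetical name
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:                   # one Pod from this template runs on each node
    metadata:
      labels:
        app: node-exporter
    spec:
      containers:
      - name: node-exporter
        image: prom/node-exporter
        ports:
        - containerPort: 9100 # node exporter's default metrics port
```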

Microservice Example

Git clone Micro Service Exercises

Running Kubernetes

- GKE

- EKS

- AKS

- On prem

Extras

- Monitoring

- Logging

- Security

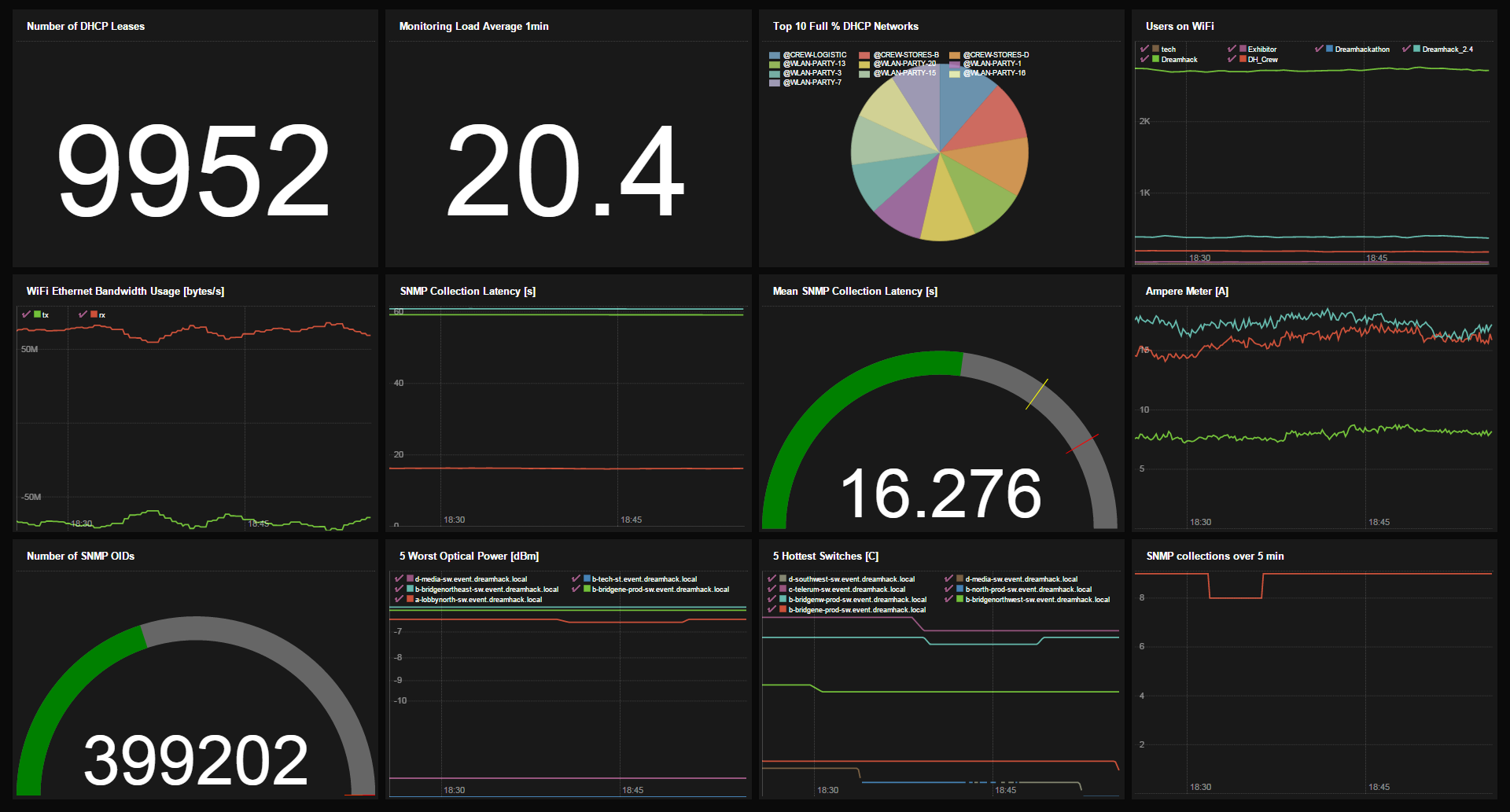

Monitoring

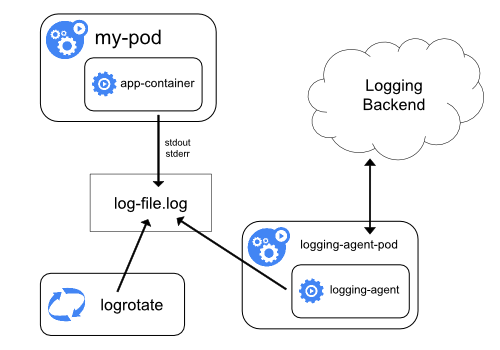

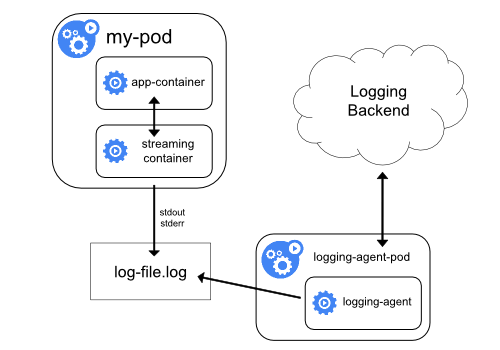

Logging

- kubectl logs

- Node level

- Cluster Level

- Side Car

Logging

- kubectl logs

    apiVersion: v1
    kind: Pod
    metadata:
      name: counter
    spec:
      containers:
      - name: count
        image: busybox
        args: [/bin/sh, -c,
                'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done']

    $ kubectl logs counter
    0: Mon Jan 1 00:00:00 UTC 2001
    1: Mon Jan 1 00:00:01 UTC 2001
    2: Mon Jan 1 00:00:02 UTC 2001

Node Level

Side Car
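
One way to sketch the sidecar pattern: the application writes to a log file on a shared volume, and a second container streams that file to its own stdout, where `kubectl logs` can pick it up. The Pod name, container names, and log path below are assumptions made for this example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: counter-sidecar       # hypothetical name
spec:
  volumes:
  - name: varlog              # shared between the app and the sidecar
    emptyDir: {}
  containers:
  - name: count               # application writes to a file instead of stdout
    image: busybox
    args:
    - /bin/sh
    - -c
    - 'i=0; while true; do echo "$i: $(date)" >> /var/log/app.log; i=$((i+1)); sleep 1; done'
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  - name: log-tailer          # sidecar streams the file to its own stdout
    image: busybox
    args: [/bin/sh, -c, 'tail -n+1 -F /var/log/app.log']
    volumeMounts:
    - name: varlog
      mountPath: /var/log
```

The sidecar's output could then be read with `kubectl logs counter-sidecar -c log-tailer`.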

Container security primitives

- SELinux

- AppArmor

- Seccomp

Container Pipeline

- Establish a pipeline to build a standard image

- Have a versioning policy

- Only allow images based on the standard image to run

- Use the same OS as the host

- Keep the image small

- Use a private registry

- Don’t embed secrets into images; use HashiCorp Vault

- https://www.cisecurity.org/benchmark/docker/

- https://github.com/docker/docker-bench-security

K8s Security

- RBAC

- NetworkPolicy

- TLS

- Image Scanning

- Aquasec/Twistlock

- Integrate with HashiCorp Vault or other public cloud secret stores

- Investigate using a container based OS (CoreOS, Atomic Linux)

- Harden and tweak

- Make sure to pass https://github.com/dev-sec/linux-baseline
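
As one concrete instance of the NetworkPolicy item, a common starting point is a default-deny policy for incoming traffic. This is a sketch under assumptions: the namespace `team-a` is hypothetical, and the policy only takes effect if the cluster's network plugin enforces NetworkPolicy.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress  # hypothetical name
  namespace: team-a           # hypothetical namespace
spec:
  podSelector: {}             # empty selector: applies to every Pod in the namespace
  policyTypes:
  - Ingress                   # no ingress rules listed, so all inbound traffic is denied
```

Further policies can then allow specific traffic, for example from Pods with a given label.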

Security Vendors

- Aquasec https://www.aquasec.com/

- Twistlock https://www.twistlock.com/

- Sysdig Falco https://www.sysdig.org/falco/

Closing remarks

Contact Me:

Email: james.strong@contino.io